Article by Paul Melcher, appeared on Kapture blog.

A couple of research papers were recently published, both touching on advancement in computer vision and machine learning. While research papers are a common occurrence in this field, these two are worth reviewing a bit deeper, as their implication will have a wide impact once they mature.

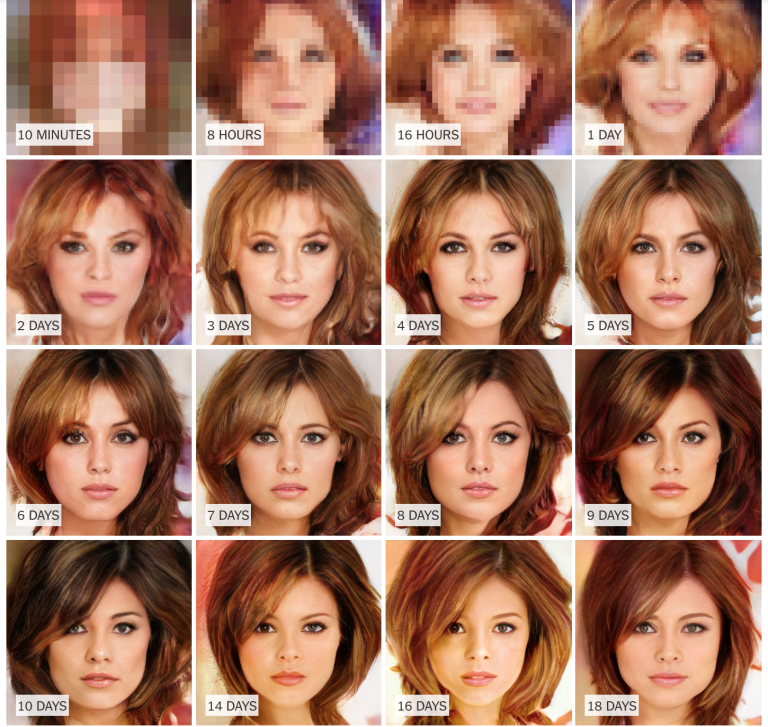

The first is a research organized by Nvidia for presentation at the upcoming International Conference on Learning Representation to be held in Vancouver this coming April. The purpose is to build a more efficient GAN by growing both the generator and discriminator progressively. A GAN (Generative Adversioral Network) is two neural networks working against each other. One creates an image, the other detects if it real or fake. If fake, it is rejected and it generates a new image until it passes the test. The idea is to build a system that generates images as real as possible without human intervention (or as little as possible).

One network is trained using real images (the discriminator) while the other learns from it rejections after being seeded with starting randomized images. In this case, Nvidia used celebrity faces. The use of GAN to generate images is rather new (2014) and outside of research, has not yet seen commercial development. Partly because it is time-consuming, even at computer speed, it takes many iterations before getting a result and partly because it is no perfectly accurate, yet . But this paper shows that we are really not far.

The person in the image above doesn’t exist. The photo itself was not taken on a camera by a photographer. It is entirely computer generated. Because the discriminator was trained with celebrity pictures, it looks like a celebrity. It is a 1024 by 1024 pixel image that could be used in any publication in the world.

The implications here is that computers will soon be able to create fully convincing images of anything without any human intervention, besides maybe inputting the subject to be created. No more cameras, no more sensors, no more Photoshop, no more photographers. The first obvious victims, besides photographers, will be stock photo agencies like Getty Images or Shutterstock whose current model is to license existing images to marketers and publishers worldwide. As solutions like these become affordable, there will be no need to take an image. Rather, we will ask for a computer to build it. The second victims are all the product shot photography. Brands will be able to ask a computer to build an image of their products, even if the product is not yet finished, and put it in any type of environment. And finally, photojournalism will become less and less credible as invented events will be indiscernible to real ones. In other words, photography as we know it will disappear.

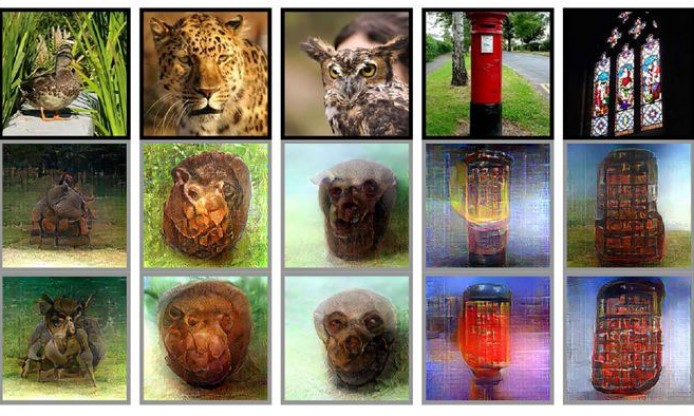

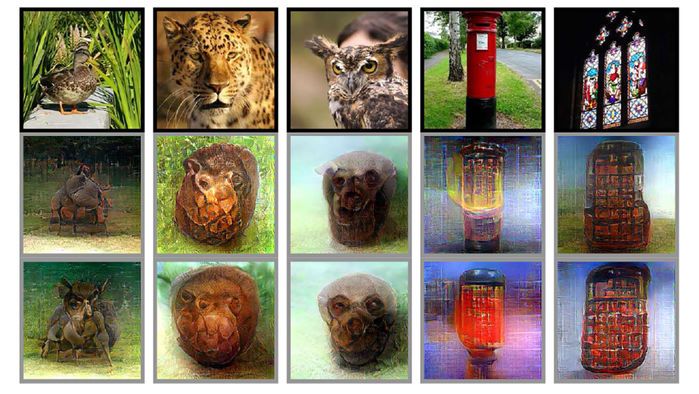

The second research paper is important as it ties into this first one. Scientists at Purdue University in Indiana used a magnetic resonance imaging device to measure blood flow to the brain as a proxy for neural activity while three people watched 1,000 images several times each. The idea was to map brain activity while they were looking at the images. For example, see what brain activity was happening when a subject was looking at an image of a butterfly.

They proceeded in applying information to train a Deep Neural Network they than used as a template. Using this template and an algorithm that had been trained to generate realistic images based on its input, it was able to guess and represent an image a person was viewing based on neural activity, 99% of the time. When tested on images a person was thinking about, it didn’t perform as well, reaching 83% for shapes.

While the process and the results here need a lot more development, we see the seeds of how, in the future, we could just think about a photo and have a computer recreate it. Besides removing any physical interface (no keyboard or voice control), it would also open unknown creative possibilities. On the flip side, it might become a troubling privacy issue as computers will also be able to see what your mind sees (and ultimately read memories as well?).

Both research point to a near future where the physical process to create an image will no longer exist. Not only camera equipment and workflow will no longer be necessary, we will not even need to be physically present. Landscape photography will be created from remote locations by people who have never been there while e-commerce retailers will produce entire catalogs in a few hours. Not only will we be able to use our own eyes as cameras, reproducing what we see (or saw, or imagine), we will also be able to create perfect pictures each time. We will also be incapable of distinguishing what is true or not. And if we don’t trust it, why use it?

Read on Kapture.co